[AI Alliance] AI Red-Teaming: Stress Testing AI Systems for Safety & Reliability

In this talk, we'll explore the critical role of AI red-teaming in ensuring the safety, security, and reliability of AI systems. As AI adoption accelerates, adversarial testing has become essential for uncovering vulnerabilities, mitigating risks, and building trustworthy models. We'll discuss practical red-teaming strategies for identifying model weaknesses, ranging from prompt injection attacks to harmful content generation. Drawing on insights from HydroX AI's automated red-teaming platform and our work with partners such as Anthropic, we'll highlight best practices that developers, AI engineers, and business leaders can use to integrate red-teaming into their development lifecycle. The session will provide actionable guidance for both open-source projects and enterprise AI deployments.

About the presenter

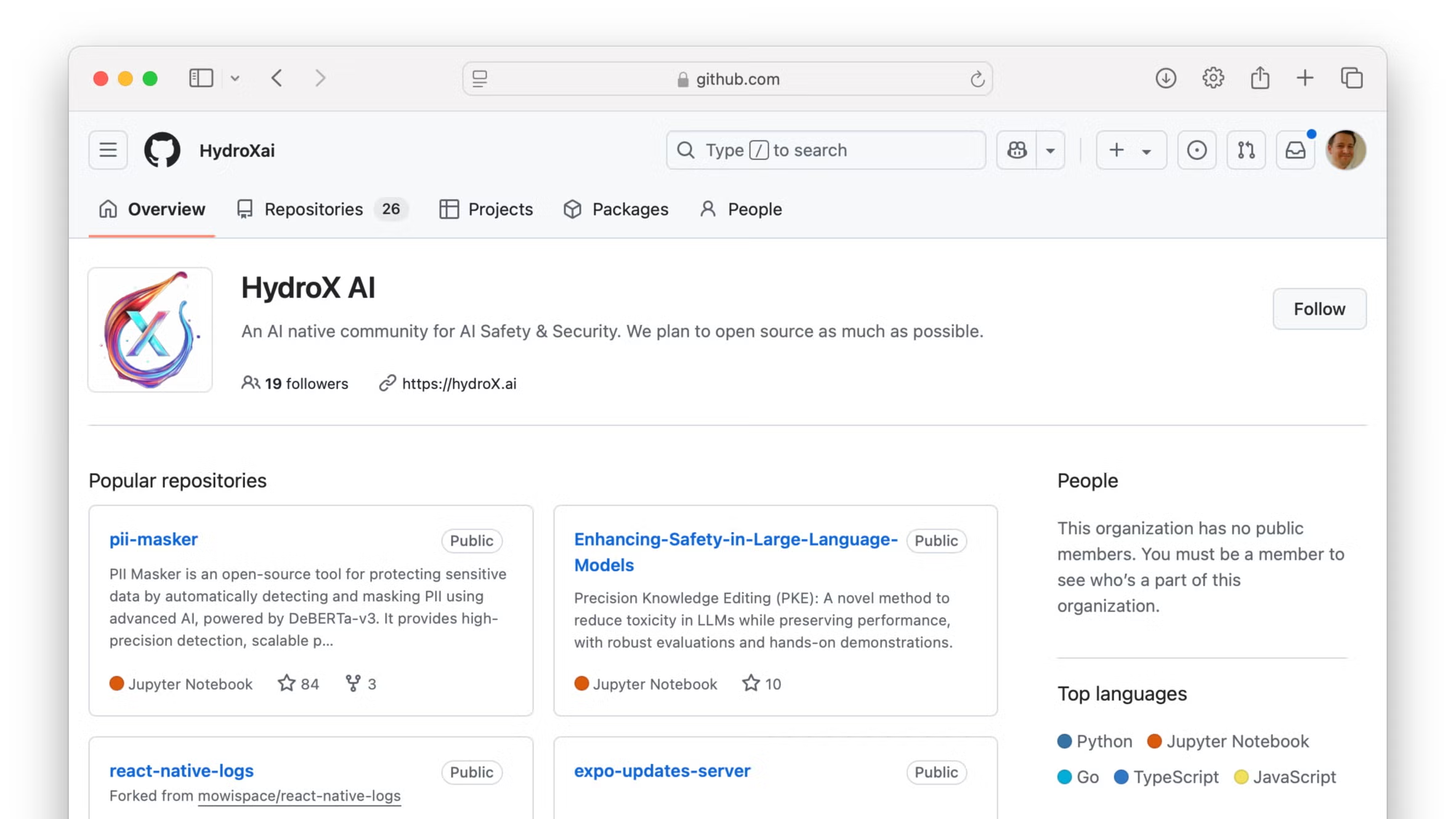

Victor Bian (COO, HydroX AI)

About the AI Alliance

The AI Alliance is an international community of researchers, developers, and organizational leaders committed to supporting and enhancing open innovation across the AI technology landscape in order to accelerate progress, improve safety, security, and trust in AI, and maximize benefits to people and society everywhere. Members of the AI Alliance believe that open innovation is essential to developing safe and responsible AI that benefits society as a whole rather than a select few big players.